Experimental porting of the etnaviv Linux DRM driver

In this series of posts I am going to elaborate on porting the etnaviv driver to Genode and what this effort entailed. The first post is about dealing with the Linux DRM driver and briefly skims over the process.

Teaser

As mentioned on our road map for 2021, experimentation with ARM GPU drivers was planned for the first half of the year.

Well, let us dive right in with a small teaser of the current state of affairs:

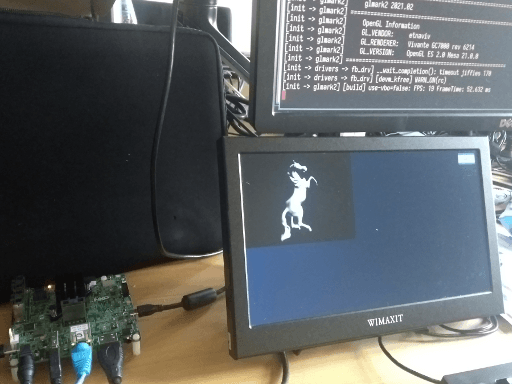

Glmark2 running on base-hw on an i.MX-8MQ-EVK board

This photo shows glmark2 running on an iMX8MQ-EVK board using base-hw. The code is available on my WIP branch (for glmark2 this branch is additionally needed). The glmark2-etnaviv run script contains all components to run it on the EVK. As it is still work-in-progress, a few limitations are in place (namely all buffer-objects are allocated using NC memory and the EGL/Mesa/etnaviv integration is somewhat messy), which affect the rendering and blitting performance.

That being said, how did we end up here?

Introduction

We already tinkered with GPU acceleration on Genode in the past, be it porting the Intel i915 Gallium driver back in 2010 or, later in 2017, implementing a GPU multiplexer for Intel Gen8 (Broadwell) GPUs.

Both times, however, the effort was focused on a particular type of device - namely the ones used in our laptops on which we develop Genode. For better or worse such a feature is not strictly necessary to get the job done so to speak and those systems are beefy enough to get away with rendering user-elements (Qt5/QML using swrast) in software. In the end, both attempts classified more as a proof-of-concept that did not see much use besides firing up the demos once in a while.

Fast-forward a few years: Sculpt is now a solid base for commonly performed tasks and we can focus on one or the other amenity. As we are also trying to prepare Sculpt for other platforms - the MNT Reform and the Pinephone spring to mind - working GPU acceleration is not merely an amenity but becomes a necessity. Where one could in general be more generous with spending CPU cycles on a common x86-based laptop, that is not so much the case on more constrained devices.

With our early excursions into GPU acceleration in mind, we set out to explore what lies beyond in the world of ARM SoCs. In this case we did not have to venture far and stopped short at the iMX8MQ SoC. We already have solid support for the platform in Genode and, more importantly, it is the SoC used in the MNT Reform.

The SoC features a Vivante GC7000L GPU for which there exists a reverse engineered open-source DRM driver called etnaviv that is maintained by the Linux community. The kernel part is accompanied by the corresponding user land part in the Mesa 3D graphics stack.

The first order of business is to get the baseline. This means running Linux and the rest of the graphics stack on hardware, and so we picked the i.MX-8MQ-EVK board. At this time Linux-fslc-5.4.2.3 was the latest stable kernel version, so we chose that. For the user land we picked voidlinux because it is easy to deal with and we were not eager to build a test user land from scratch. This boiled down to

1. building Linux-fslc-5.4.2.3 (imx_v8_defconfig)
2. extracting the archive to the SD card
3. populating /boot on the SD card
4. creating an agetty-ttymxc0 script to get access via the serial console (not strictly necessary but nice-to-have)

The first stumbling block was getting the kernel to boot. It got stuck every time we tried. The kernel version we used in the past on this board is version 4.12.0. This kernel, however, is too old for our use-case. We then tried to boot 4.12.2, which is the newest version of this series. Well, that did not boot either and apparently got stuck at the same point as 5.4, judging by the kernel messages. The culprit seemed to be the busfreq driver. We traced it back to an arm_smccc_smc() call that did not return (“well, a bus access that does not return - I am not that eager to debug that at the moment”). We jumped the gun and built a newer version of u-boot for the EVK in the hope that it might solve the issue.

It did not.

Do we need a functioning busfreq driver? Definitely, maybe … probably not…

imx8mq_evk.dtsi → busfreq : status = "disabled";

Well, the kernel boots now. Yeah… let us deal with that some time in the future.

As we were using imx_v8_defconfig as a starting point, we disabled the mxc/viv_gpu driver so that etnaviv is always used. Additionally, we built a minimal Mesa library containing only the etnaviv back end, with DRM and Wayland as the only enabled platforms:

git clone --depth 1 --branch mesa-21.0.0 https://gitlab.freedesktop.org/mesa/mesa.git

meson build -Dplatforms=wayland -Ddri3=enabled -Dgallium-drivers=swrast,etnaviv,kmsro \

-Dgbm=enabled -Degl=enabled -Dbuildtype=release -Db_ndebug=true \

-Dgles2=enabled -Dglx=disabled -Degl=enabled

ninja -C build

Last but not least we compiled glmark2.

Having built everything manually makes it easier to debug, or rather to correlate, why things turn out differently on Genode. It is especially useful for instrumenting how the kernel and user land interact, e.g., which I/O controls are used and when:

LD_PRELOAD="/foo/lib/libEGL.so /foo/lib/libGLESv1_CM.so /foo/lib/libGLESv2.so /foo/lib/libgbm.so /foo/lib/libglapi.so /foo/lib/libvulkan_lvp.so" LD_LIBRARY_PATH="/foo/lib" LIBGL_DRIVERS_PATH="/foo/lib/dri" EGL_LOG_LEVEL=debug LIBGL_DEBUG=verbose strace -o glmark2.log ./glmark2 2>&1|tee glmark2.log

The log files are then exposed to the usual awk/grep/sed/… scrutiny.

With the baseline recorded and having checked that the graphics stack indeed works on the target system it was time to bring it to Genode.

At this point I also examined the device-tree and gathered all needed information about the vivante,gc device. After all, we have to reference this information on Genode as well.

Porting the DRM driver

The rough plan is obvious: porting the DRM driver and eventually updating our version of Mesa. After all, we were still using a by now ancient Mesa 11.2. Much of the complexity in the graphics stack lies in the user land part, still porting the DRM driver turned out to be more time-consuming in the past. That was mostly due to the way we extracted drivers from Linux by utilizing our DDE Linux approach (I am paraphrasing here):

“Pick the minimal amount of source-code from Linux to get the job done, try to patch the contrib code as little as possible and build an emulation layer that mimics the rest of Linux in the most straightforward way.”

Naturally, that is easier said than done, especially if you have to debug dubious behavior of the ported driver after the fact because you implemented some semantics of the Linux API slightly wrong. Mapping memory allocated via kmalloc for DMA purposes later on did not play nice as we employed two distinct memory pools for DMA and non-DMA memory - so the contrib code got sprinkled with an LX_DMA_MEM flag where necessary in the kmalloc invocations. Also, the details of how the DDE was employed changed over time but on the surface it was more or less the same.

On that account Stefan's most recent endeavors were a welcome change - his “DDE Linux experiments” series is a recommended read - as we were able to benefit from them immensely.

As a recap: when porting a Linux driver we usually start by taking a look at the driver code itself and create a Makefile that references these source files. In an iterative process we either pull in more contrib code - mostly header files to get the data structures and declarations - or stub the missing things with our own emulation code every time we encounter a compiler error. Depending on which additional contrib code is pulled in, new errors will pop up and the cycle repeats. Eventually we end up at the linking stage and are greeted with one or the other undefined reference. Those are resolved by providing a dummy implementation for the reference. After the code has been compiled and linked successfully the interesting work begins, as the component is in no way able to perform its duties yet due to all the sidestepped functionality. Getting there, however, is a rinse-and-repeat cycle that is time-consuming and honestly quite boring.

Here is where Stefan's create_dummies tool comes in handy. However, for it to perform its magic we have to alter the porting approach. We still start by identifying the needed driver code but afterwards we work with a Linux source tree that is pre-compiled and has its configuration slightly altered to be more in favor of our approach. In particular we disable module loading support so that everything will end up in the kernel binary because this dynamic mechanism makes cross-referencing more difficult. (For obvious reasons checking that the modified kernel still works is mandatory.)

The reason behind that is, as you might have guessed already, instead of creating the header files ourselves, we will use the contrib header files directly. Since Linux generates some files during building, e.g. include/generated, we need the pre-compiled tree to provide us with those too.

By going down this road you end up much faster at the aforementioned linking step of the overall porting effort. At this point I used create_dummies:

./tool/dde_linux/create_dummies TARGET=drivers/gpu/etnaviv \

LINUX_KERNEL_DIR=/…/linux-fslc-5.4-2.3.x-imx/ show

This command shows you all the undefined references, and by pulling in more contrib code this list changes. Skimming through the symbols and checking them against the contrib code indicates where it is reasonable to keep the contrib code and where to fall back to implementing the missing functionality ourselves. The big improvement over the old approach is that using the tool is a matter of seconds, so one can really play around and see what pops up and what goes away.

In the end we settled on pulling in some parts of the DRM subsystem. The reasoning behind this decision is we were not eager to implement the whole memory management by ourselves at the moment. After all this is merely an experiment for now.

Eventually it is time to make the linking step succeed. Again we used the create_dummies tool but this time changed the operation:

./tool/dde_linux/create_dummies TARGET=drivers/gpu/etnaviv \

DUMMY_FILE=/…/src/drivers/gpu/etnaviv/generated_dummies.c \

LINUX_KERNEL_DIR=/…/linux-fslc-5.4-2.3.x-imx/ generate

This will check the list of undefined references, look up the declarations of functions, (global) variables and so on, and generate a matching dummy definition:

#include <linux/seq_file.h>

loff_t seq_lseek(struct file * file,loff_t offset,int whence)

{

lx_emul_trace_and_stop(__func__);

}

The body of each function contains a call to lx_emul_trace_and_stop(__func__) that, as the name implies, generates a LOG message and stops the execution when it is called.

At this point there is a driver component that in theory should be executable. Of course we have to somehow poke the kernel code to make it do something useful. In the past we called the various initialization and probing functions manually. Since we on the one hand have the whole kernel at our disposal now and on the other cannot easily influence things like before, we have to some degree play by the kernel's rules. That being said, we can still manipulate the kernel to be more in line with our needs, most prominently by shadowing header files through adjusting the include path and include order.

For example by shadowing <linux/init.h> and providing an implementation for the registration of the various initcalls, we end up with a list of functions that when called in the proper order, will initialize most of the subsystems needed for running the etnaviv driver. Traversing the list and executing the initcalls is the first thing that is done after the startup of the driver component.
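To make the idea more tangible, here is a minimal sketch of such an initcall list in plain C. It is not the code from the driver component: register_initcall(), execute_initcalls_in_order(), the numeric levels and the three demo functions are names made up for illustration, and a shadowed header would presumably hide the registration behind the usual initcall macros.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for what a shadowed <linux/init.h> could register. */
typedef int (*initcall_t)(void);

struct initcall_entry {
	initcall_t  fn;
	unsigned    level;  /* lower levels run first (core, subsys, device, ...) */
	char const *name;
};

enum { MAX_INITCALLS = 64 };

static struct initcall_entry initcalls[MAX_INITCALLS];
static size_t                initcall_count;

/* Registration may happen in any order, the list is kept sorted by level. */
int register_initcall(initcall_t fn, unsigned level, char const *name)
{
	if (initcall_count >= MAX_INITCALLS)
		return -1;

	/* insertion sort, stable for initcalls that share a level */
	size_t i = initcall_count;
	while (i > 0 && initcalls[i - 1].level > level) {
		initcalls[i] = initcalls[i - 1];
		i--;
	}
	initcalls[i] = (struct initcall_entry){ fn, level, name };
	initcall_count++;
	return 0;
}

/* Runs every registered initcall and returns the number of failures. */
unsigned execute_initcalls_in_order(void)
{
	unsigned failed = 0;
	for (size_t i = 0; i < initcall_count; i++)
		if (initcalls[i].fn() != 0)
			failed++;
	return failed;
}

/* Demo initcalls that merely record the order in which they were run. */
static char   trace[8];
static size_t trace_len;

int core_init(void)   { trace[trace_len++] = 'c'; return 0; }
int subsys_init(void) { trace[trace_len++] = 's'; return 0; }
int device_init(void) { trace[trace_len++] = 'd'; return 0; }
```

Registering device_init at level 6, core_init at level 1 and subsys_init at level 4 and then calling execute_initcalls_in_order() runs them as core, subsys, device, regardless of the registration order.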

—

This way of shadowing kernel headers is particularly helpful because we must shadow certain header files that contain inline functions with inline assembly, e.g. asm/current.h:

#pragma once

struct task_struct;

/*

 * The original implementation uses inline assembly we for obvious

 * reason cannot use.

 */

struct task_struct *get_current(void);

#define current get_current()

—

Naturally, when executing the initcalls one or the other generated dummy function is called. Eventually all is in order, the component starts and the contrib code shows some diagnostic messages like Linux normally would. To get this far we made our life a little easier by re-using parts of the already existing lx_kit from the dde_linux repository. That is where our hybrid approach comes in. Unlike Stefan, we opted for reusing the existing emulation code. In particular that means parts of the timing, interrupt, thread and memory handling back ends. However, we do not use the lx_impl layer that sits on top of the lx_kit.

Since we learned the hard way that it is best to keep Linux's C world and Genode's C++ world isolated, we implemented a translation layer where the Linux data structures are either treated as opaque or are converted explicitly.

For example there is

int lx_emul_mod_timer(void *, unsigned long);

that as the name suggests represents mod_timer and is used by the C side of things. The timer object itself is handed in as opaque pointer and is stored in some internal timer handling registry. When the timeout for this timer triggers, the C++ back end code will call

void genode_emul_execute_timer(void *);

where the opaque pointer is converted back to the Linux timer object. Unlike the old lx_impl layer, this conversion layer does not implement Linux APIs directly but provides a set of building blocks. For implementing the functionality we used Linux primitives that utilize these building blocks. That is mostly done to speed up development. After all, we now have all the normal header files at our disposal and we are not trying to build a reusable emulation environment anyway.
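The following sketch illustrates the opaque-pointer idea with the two function signatures from above. Everything else is an assumption made for the example: the real back end is written in C++, whereas the fixed-size slot table, timer_registry_tick() and struct demo_timer are invented here, and jiffies wrap-around is ignored for brevity.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical back-end state: one slot per pending timer. */
struct timer_slot {
	void          *lx_timer;  /* opaque to the back end, never dereferenced */
	unsigned long  expires;   /* absolute timeout in jiffies */
	int            pending;
};

enum { MAX_TIMERS = 16 };
static struct timer_slot slots[MAX_TIMERS];

/* Demo stand-in for the Linux side: only here the pointer is cast back. */
struct demo_timer { int fired; };

void genode_emul_execute_timer(void *lx_timer)
{
	((struct demo_timer *)lx_timer)->fired++;
}

/*
 * (Re-)arm a timer. Like mod_timer, it returns 1 if the timer was already
 * pending and 0 otherwise. The -1 for a full table is specific to the sketch.
 */
int lx_emul_mod_timer(void *lx_timer, unsigned long expires)
{
	struct timer_slot *free_slot = NULL;

	for (size_t i = 0; i < MAX_TIMERS; i++) {
		if (slots[i].pending && slots[i].lx_timer == lx_timer) {
			slots[i].expires = expires;
			return 1;
		}
		if (!slots[i].pending && !free_slot)
			free_slot = &slots[i];
	}
	if (!free_slot)
		return -1;

	*free_slot = (struct timer_slot){ lx_timer, expires, 1 };
	return 0;
}

/* Called when time advances: fires all expired timers, returns their count. */
unsigned timer_registry_tick(unsigned long now)
{
	unsigned fired = 0;
	for (size_t i = 0; i < MAX_TIMERS; i++) {
		if (slots[i].pending && slots[i].expires <= now) {
			slots[i].pending = 0;
			genode_emul_execute_timer(slots[i].lx_timer);
			fired++;
		}
	}
	return fired;
}
```

The registry only stores and compares the pointer. Knowledge about the layout of the timer object stays entirely on the side that handed it in, which is the point of keeping both worlds apart.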

At this point it is time to stimulate the etnaviv driver somehow. As the driver relies on the device-component API hooked up via the OF API, i.e. the device-tree, we had to find out how all those devices and platform-devices are managed and created. Using the pre-compiled Linux kernel on the target came in handy. Whenever we wanted to find out in which order things happen, we would instrument the kernel by placing BUG() at the location of interest:

…

[ 4.002803] Call trace:

[ 4.005251] etnaviv_gpu_bind+0x0/0x10

[ 4.009000] etnaviv_bind+0x10c/0x1a8

[ 4.012662] try_to_bring_up_master+0x2b4/0x32c

[ 4.017190] __component_add+0xbc/0x160

[ 4.021025] component_add+0x10/0x20

[ 4.024599] etnaviv_gpu_platform_probe+0x254/0x290

[ 4.029477] platform_drv_probe+0x50/0xa0

[ 4.033485] really_probe+0xd8/0x350

[ 4.037058] driver_probe_device+0x54/0xf0

[ 4.041154] __device_attach_driver+0x80/0x120

[ 4.045597] bus_for_each_drv+0x74/0xc0

[ 4.049431] __device_attach+0xe8/0x160

[ 4.053266] device_initial_probe+0x10/0x20

[ 4.057448] bus_probe_device+0x90/0xa0

[ 4.061284] deferred_probe_work_func+0xa0/0xd0

[ 4.065816] process_one_work+0x1b0/0x310

[ 4.069824] worker_thread+0x230/0x420

[ 4.073572] kthread+0x140/0x160

[ 4.076800] ret_from_fork+0x10/0x1c

[ 4.080378] Code: 2a2003e0 531f7c00 d65f03c0 d503201f (d4210000)

…

That provided us with the information on how the various parts interacted with each other. As the etnaviv driver already did register itself, all that was needed was creating a platform device and probing the platform bus.

—

At first we pulled in more of the contrib code dealing with the device tree and the platform management but eventually decided to implement only the bare minimum. The requirements of the driver are small because the device does not have that many dependencies on other parts of the SoC, and we were unsure about the inter-dependencies of the contrib code.

—

So basically after the component starts, we initialize the Genode back end infrastructure, execute the kernel initcalls and set up the platform device.

void Lx_kit::initialize(Genode::Env & env, Genode::Allocator &alloc)

{

static Genode::Constructible<Lx::Task> _linux { };

Lx_kit::Env & kit_env = Lx_kit::env(&env);

env.exec_static_constructors();

Lx::malloc_init(env, alloc);

Lx::scheduler(&env);

Lx::Irq::irq(&env.ep(), &alloc);

Lx::timer(&env, &env.ep(), &alloc,

&_jiffies, &hz);

Lx::Work::work_queue(&alloc);

_linux.construct(_run_linux, reinterpret_cast<void*>(&kit_env),

"linux", Lx::Task::PRIORITY_0, Lx::scheduler());

Lx::scheduler().schedule();

}

The Lx_kit::initialize() function is directly called from Component::construct() and sets up the linux task, which handles the initialization:

static void _run_linux(void *args)

{

Lx_kit::Env &kit_env = *reinterpret_cast<Lx_kit::Env*>(args);

lx_emul_init_kernel();

kit_env.initcalls.execute_in_order();

lx_emul_start_kernel();

while (1) {

Lx::scheduler().current()->block_and_schedule();

}

}

As a recap: we run all contrib code in the context of an Lx::Task object and the task mechanism provides us with the necessary hooks to make cooperative scheduling decisions.

We mentioned the device-tree before when acquiring the baseline - the driver wants access to specific clocks and I/O memory. On Genode the platform specific platform-driver is in charge of providing this information. So we added the following snippet to the platform driver's configuration in the drivers_managed-imx8q_evk raw archive:

<device name="gpu" type="vivante,gc">

<io_mem address="0x38000000" size="0x40000"/>

<irq number="35"/>

<power-domain name="gpu"/>

<clock name="gpu_gate"/>

<clock name="gpu_core_clk_root"

parent="gpu_pll_clk"

rate="800000000"

driver_name="core"/>

<clock name="gpu_shader_clk"

parent="gpu_pll_clk"

rate="800000000"

driver_name="shader"/>

<clock name="gpu_axi_clk_root"

parent="gpu_pll_clk"

rate="800000000"

driver_name="bus"/>

<clock name="gpu_ahb_clk_root"

parent="gpu_pll_clk"

rate="800000000"

driver_name="reg"/>

</device>

Getting access to this kind of information is simply done by calling Platform::Connection::device_by_type("vivante,gc").

Making the DRM API available

With the driver component up and running the next step is using the driver. On Linux one uses the DRM API to do that. Since we pulled the DRM subsystem in already, it seems reasonable to follow suit.

Normally, from a user land perspective, that would involve opening a device file, e.g. /dev/dri/cardN, and using ioctl(2) to interact with the device - let us see what is needed by the driver in the kernel.

After going through the layers in the kernel we end up in

static int etnaviv_open(struct drm_device *dev, struct drm_file *file);

where we have to pass in a struct drm_device and a struct drm_file. The drm_device is already created via the DRM subsystem, which leaves us with providing the drm_file. Internally this object stores a struct file that in turn is needed later on to perform I/O controls:

long drm_ioctl(struct file *filp, unsigned int cmd, unsigned long arg);

With that in place we tried to execute a simple device-specific I/O control, namely ETNAVIV_PARAM_GPU_MODEL by adding the following code to the lx_emul_start_kernel() function:

#include <drm/etnaviv_drm.h>

extern int lx_drm_ioctl(unsigned int, unsigned long);

int lx_emul_start_kernel()

{

[…]

struct drm_etnaviv_param param = {

.pipe = 0,

.param = ETNAVIV_PARAM_GPU_MODEL,

.value = 0,

};

lx_emul_printf("%s: query gpu model param: %px\n", __func__, &param);

err = lx_drm_ioctl(DRM_IOCTL_ETNAVIV_GET_PARAM,

(unsigned long)&param);

if (err) {

return err;

}

lx_emul_printf("%s: model: 0x%llx\n", __func__, param.value);

[…]

}

The lx_drm_ioctl function is merely a wrapper over drm_ioctl that additionally performs some kind of (un-)marshaling of the request data structures (more on that in a bit). That being said, I was greeted by the following LOG messages:

[init -> imx8q_gpu_drv] lx_emul_start_kernel: query gpu model param: 403fef58

[init -> imx8q_gpu_drv] lx_emul_start_kernel: model: 0x7000

Nice. It goes without saying that the kernel spoiled the fun by already printing the model and revision to the LOG but we nevertheless called into the driver and did not crash (yet) in the process.

Exporting the DRM API

Alright, we now have a driver component that does things when instructed. Unfortunately this interface is purely component-local - we cannot use it from the outside. However, as mentioned in the beginning of this post, we already made a remote interface work in the past. The most recent implementation uses the Gpu session that is an interface specifically tailored to Intel GPUs. It allows for allocating and mapping of buffer-objects, either in a PPGTT or the GGTT, as well as triggering the execution of batch-buffers. This session is used in our libdrm i915 back end.

Besides the Gpu session, there was the ad-hoc designed Drm session that we came up with during the hack'n'hike in 2017. It is ad-hoc in the sense that at the time we extended the intel_fb driver - a port of the Linux DRM/KMS driver for driving displays with Intel GPUs - to drive the render-engines as well and thereby provide 3D acceleration. In this case we used the DRM API of Linux directly and this Drm session was simply a remote interface of that API. Each request was transported from the client to the driver via Genode's packet-stream (shared-memory) interface.

So, which road do we take here? Of course, rather than employing a remote interface, we could co-locate the driver and the application in one component. That, however, would require harmonizing the execution flow and could make debugging more involved. Having independent entities looks more promising, especially since that is what we want to have in the end anyway. Implementing a Gpu session for the Vivante GPU without prior experience could be trying and we already have the DRM API exposed…

Long story short, we dug up the by now ancient topic branch and extracted the Drm session. Going down this road will lead to results faster and afterwards, we should be in a better position if we choose to wrap the DRM API with a more pronounced Gpu session interface.

The driver component implements the Drm::Session_component. If the driver's entry-point gets a Drm request, it will unblock the drm_worker task. In its context lx_drm_ioctl will execute the received request:

static void _run(void *task_args)

{

Task_args *args = static_cast<Task_args*>(task_args);

Tx::Sink &sink = *args->sink;

Object_request &obj = args->obj;

while (true) {

_drm_request(sink);

Lx::scheduler().current()->block_and_schedule();

}

}

static void _drm_request(Tx::Sink &sink)

{

while (sink.packet_avail() && sink.ready_to_ack()) {

Packet_descriptor pkt = sink.get_packet();

void *arg = sink.packet_content(pkt);

int err = lx_drm_ioctl((unsigned int)pkt.request(), (unsigned long)arg);

pkt.error(err);

sink.acknowledge_packet(pkt);

}

}

The request, on the other hand, is created by the client component. Like with the Gpu session, there is a similar libdrm back end for etnaviv and likewise the I/O control requests are processed there.

In order to utilize libdrm, we had to update our ported version because it is too old to contain support for the etnaviv driver. We picked the latest stable one, which is libdrm-105. One evident change is that in this version FreeBSD support is more pronounced and since our libc is based on the one from FreeBSD, we are treated as such. We have to keep in mind that I/O controls are structured differently on FreeBSD and Linux, e.g. the IN/OUT bits are swapped, and have to account for that when talking to the driver component.
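To illustrate what the swapped bits mean in practice, here is a small sketch of such a conversion. The constants follow the encodings in FreeBSD's sys/ioccom.h and Linux' asm-generic/ioctl.h. The function bsd_to_linux_ioctl() and the prefixed macro names are made up for this example, and where exactly our code performs the translation is not shown here.

```c
#include <assert.h>
#include <stdint.h>

/* FreeBSD encoding: 13-bit size, direction flags in the top three bits */
#define BSD_IOC_VOID     0x20000000u
#define BSD_IOC_OUT      0x40000000u  /* kernel copies data out to user land */
#define BSD_IOC_IN       0x80000000u  /* kernel copies data in from user land */
#define BSD_IOCPARM_MASK 0x1fffu

/* Linux encoding: 14-bit size, 2-bit direction field at bit 30 */
#define LX_IOC_WRITE     1u  /* user land writes, i.e. the kernel copies in */
#define LX_IOC_READ      2u  /* user land reads, i.e. the kernel copies out */
#define LX_IOC_DIRSHIFT  30
#define LX_IOC_SIZESHIFT 16

/* Translate a FreeBSD-encoded request number into the Linux encoding. */
uint32_t bsd_to_linux_ioctl(uint32_t bsd)
{
	uint32_t const nr_type = bsd & 0xffffu;  /* command number and group */
	uint32_t const size    = (bsd >> 16) & BSD_IOCPARM_MASK;

	uint32_t dir = 0;  /* BSD_IOC_VOID maps to the Linux "none" value 0 */
	if (bsd & BSD_IOC_IN)  dir |= LX_IOC_WRITE;
	if (bsd & BSD_IOC_OUT) dir |= LX_IOC_READ;

	return (dir << LX_IOC_DIRSHIFT) | (size << LX_IOC_SIZESHIFT) | nr_type;
}
```

A request with both directions set keeps its numeric value, whereas write-only and read-only requests trade places and requests without an argument lose the BSD-specific void bit.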

Libdrm comes with its own set of tests for the etnaviv driver and we were eager to try them out. Up to now we have not done much with the driver and past experience tells us that things will get interesting, to say the least, when the first buffer-objects get flying. Additionally, we checked out the old etnaviv_gpu_tests repository for a low-level but more interesting cube3d test.

First we had to get creative - as we mentioned before, the user land application normally tries to open the device file to obtain a fd with which I/O controls are performed. We changed the code to use the arbitrarily chosen fd 42 and renamed the ioctl function:

CC_OPT += -Dioctl=genode_ioctl

It goes without saying that this is a rather hackish fix for the issue at hand - namely that libdrm relies on the libc and we do not play ball in that regard. (The long-term solution would be to write a VFS-plugin that would encapsulate the Drm/Gpu session.)
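For illustration, the replacement function could look roughly like the sketch below. Only the name genode_ioctl and the fd 42 are taken from the text. The forwarding hook forward_to_drm_session() is a placeholder invented for this example and merely records the request instead of talking to a session.

```c
#include <assert.h>
#include <errno.h>
#include <stdarg.h>

enum { DRM_FD = 42 };  /* the arbitrarily chosen fd */

/* Placeholder for the code that would hand the request to the Drm session. */
static unsigned long last_request;

static int forward_to_drm_session(unsigned long request, void *arg)
{
	(void)arg;
	last_request = request;
	return 0;
}

/*
 * With -Dioctl=genode_ioctl every ioctl(fd, request, arg) call in libdrm
 * ends up here. The function stays variadic so that it matches the ioctl
 * prototype the preprocessor renames along with the calls.
 */
int genode_ioctl(int fd, unsigned long request, ...)
{
	va_list ap;
	va_start(ap, request);
	void *arg = va_arg(ap, void *);
	va_end(ap);

	if (fd != DRM_FD) {
		errno = EBADF;
		return -1;
	}
	return forward_to_drm_session(request, arg);
}
```

Any descriptor other than 42 is rejected, so a stray call that was meant for the libc fails loudly instead of silently reaching the GPU driver.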

With the test applications slightly altered it went as anticipated: the DRM_IOCTL_ETNAVIV_GEM_NEW requests came rolling in and the driver component reached some of the as yet unimplemented dummy functions.

Buffer-object backing store

Now one of the reasons why we pulled in the DRM subsystem was that we did not want to deal with the life-time management of buffer-objects on our own. The DRM GEM relies on other parts of Linux to satisfy its backing store requirements, namely providing swap-able memory. Under the hood the 'shmem file system' is used for that. As we were unsure what baggage would come flying our way when pulling that in as well, we skimmed the DRM code and luckily there were not that many direct calls to it. In the end we got away with implementing

struct file *shmem_file_setup(char const *name, loff_t size, unsigned long flags);

Depending on where and how in the DRM code a buffer-object is accessed the point and type of entry differs. To be on the safe(r) side we pre-populated all mandatory fields, allocated the backing memory and pointed the address space object to that memory. As this backing memory will most definitely be used by the GPU, we allocated it explicitly as DMA memory. In the past we kept things simple - maybe too simple - and always allocated this type of memory as 'normal non-cache-able' (NC) memory. When dealing with GPUs, where the memory will be used by the GPU as well as the CPU that might be problematic (the one or the other reader might anticipate where we are going with that, we will come back to that later when talking about integrating Mesa). Each buffer-object allocated in such a manner will be backed by its own RAM data space.

The next step was dealing with how the backing memory is accessed via the address space object within the DRM subsystem and the etnaviv driver. Buffer-objects end up getting accessed page-wise, among others to populate the Vivante MMU, via a struct page object. So in

struct page *shmem_read_mapping_page_gfp(struct address_space *mapping, pgoff_t index, gfp_t gfp);

we created a page object for each page and put that into a Genode::Registry. As a registry is just a linked-list under the hood, chances are that this utility is also too simple for the job. For now let us assume that buffer objects do not change so much during run time and are not that large (again, it might be obvious where we are going with that…).

With allocating buffer-objects settled, the next step is mapping them. Fortunately that is rather straightforward as DRM_ETNAVIV_GEM_CPU_PREP and DRM_ETNAVIV_GEM_CPU_FINI are used by libdrm users to indicate when CPU access is needed. The actual mapping is done via mmap(2) where the virtual offset argument denotes the GEM object. As each object is registered with the DRM VMA manager, we can use that to look up the address space that contains the object. From there we can look up the corresponding Genode::Ram_dataspace_capability and return that via the Drm session to the client. This dataspace is then attached to the virtual address space of the client and this address is returned. As we still circumvent the libc, we also rename the mmap function to drm_mmap and likewise provide drm_unmap.

Executing the command-buffer

With all the buffer-objects allocated and prepared in the application, the first submit arrived. And the driver component immediately crashed with a page-fault. If you inspect the struct drm_etnaviv_gem_submit data structure corresponding to DRM_IOCTL_ETNAVIV_GEM_SUBMIT closely, you probably notice various pointer fields:

[…]

__u64 bos; /* in, ptr to array of submit_bo's */

__u64 relocs; /* in, ptr to array of submit_reloc's */

__u64 stream; /* in, ptr to cmdstream */

[…]

The kernel normally accesses these pointers in a safe manner, i.e., makes sure the user-pages are mapped and so on, and copies the data to its own memory via copy_from_user(). As mentioned before, each DRM request is converted into a packet-stream operation and the packet-stream's backing store dataspace is used by the client and the driver. Where the dataspace is attached in the component's address space might differ. Since that is transparent to the client, the addresses used in the I/O control request cannot be used by the driver directly.

Instead we have to marshal the request into the packet-stream. Thereby the data is copied into the packet and each pointer is converted into a relative offset. In the driver the pointer is converted back by adding the offset to the base address of the packet (the driver still has to make sure that the pointers only point within the packet-stream packet). The marshaling code is put in lx_drm_ioctl() because we need access to the structures.
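The pointer-to-offset conversion can be sketched as follows. The request structure is a deliberately trimmed-down, hypothetical stand-in with a single pointer field rather than the real struct drm_etnaviv_gem_submit, and marshal_submit() as well as unmarshal_stream() are names invented for this example.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical, trimmed-down request with one embedded user pointer. */
struct demo_submit {
	uint32_t nr_words;  /* number of 32-bit words in the stream */
	uint32_t pad;
	uint64_t stream;    /* client: pointer, inside the packet: offset */
};

/* Client side: copy request and payload into the packet, 0 on overflow. */
size_t marshal_submit(void *packet, size_t packet_size,
                      struct demo_submit const *req)
{
	size_t const offset = sizeof(*req);

	if (packet_size < offset
	 || req->nr_words > (packet_size - offset) / sizeof(uint32_t))
		return 0;

	size_t const payload = req->nr_words * sizeof(uint32_t);

	struct demo_submit copy = *req;
	copy.stream = offset;  /* the pointer becomes a packet-relative offset */

	memcpy(packet, &copy, sizeof(copy));
	memcpy((char *)packet + offset,
	       (void const *)(uintptr_t)req->stream, payload);
	return offset + payload;
}

/* Driver side: offset back to a local pointer, NULL if it leaves the packet. */
uint32_t *unmarshal_stream(void *packet, size_t packet_size)
{
	struct demo_submit req;

	if (packet_size < sizeof(req))
		return NULL;
	memcpy(&req, packet, sizeof(req));

	if (req.stream < sizeof(req) || req.stream > packet_size
	 || req.stream % sizeof(uint32_t) != 0
	 || req.nr_words > (packet_size - req.stream) / sizeof(uint32_t))
		return NULL;

	return (uint32_t *)((char *)packet + req.stream);
}
```

The bounds check on the driver side is the important part: the offset comes from another component and therefore has to be treated as untrusted input.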

Every now and then the client wants to synchronize, or rather wait for the execution of a command-buffer to finish, and issues a DRM_IOCTL_ETNAVIV_WAIT_FENCE I/O control request. The request contains the fence that was obtained when submitting the command-buffer.

Summary

With that all settled, the overall execution functionality is in place, we can allocate objects, submit work and wait for it to finish. Next step is putting actual work on the driver.

—

Concurrently to me fooling around with the DRM driver, my colleague Sebastian updated our Mesa port to version 21.0.0. So rather than using the various tests, let us use Mesa to paint something nice to the display.

The next blog post will be about bringing Mesa into the picture.

—

Of course the overall porting process was straightened out for this post, but there was one or the other time where things got stuck for mundane reasons:

When I initially transcribed the device-tree node to the platform driver I got the IRQ number wrong. Instead of 35 I added 55 to the configuration. In hindsight I am not sure how that happened because no matter how 0x23, which is the number in the .dtb (the .dts contains 3), is twisted, it does not become 55. That was a moment where I was 100, maybe 110, percent sure I got it right because “of course I checked the IRQ number, it must have something to do with the emulation code” but I stand corrected. In the end it is always those little things that lead to frustration ☺.

Amount of code

Just to put things into perspective, the complete amount of Linux contrib code boils down to

772 text files.

772 unique files.

0 files ignored.

github.com/AlDanial/cloc v 1.81 T=0.98 s (789.6 files/s, 224515.1 lines/s)

-------------------------------------------------------------------------------

Language files blank comment code

-------------------------------------------------------------------------------

C/C++ Header 719 24708 53373 113074

C 41 3748 6944 15876

Assembly 12 147 616 1024

-------------------------------------------------------------------------------

SUM: 772 28603 60933 129974

-------------------------------------------------------------------------------

from which the etnaviv driver only amounts to the following LoC

29 text files.

29 unique files.

0 files ignored.

github.com/AlDanial/cloc v 1.81 T=0.03 s (979.8 files/s, 325336.5 lines/s)

-------------------------------------------------------------------------------

Language files blank comment code

-------------------------------------------------------------------------------

C 15 1311 491 5234

C/C++ Header 14 427 255 1911

-------------------------------------------------------------------------------

SUM: 29 1738 746 7145

-------------------------------------------------------------------------------

The imx8mq_gpu_drv driver component code amounts to

105 text files.

104 unique files.

2 files ignored.

github.com/AlDanial/cloc v 1.81 T=0.10 s (1077.5 files/s, 116263.8 lines/s)

-------------------------------------------------------------------------------

Language files blank comment code

-------------------------------------------------------------------------------

C/C++ Header 84 409 545 3516

C 5 953 120 2235

C++ 12 706 407 1978

make 1 21 8 108

Assembly 1 13 52 43

-------------------------------------------------------------------------------

SUM: 103 2102 1132 7880

-------------------------------------------------------------------------------

from which about 1900 LoC consists of the lx_kit back end implementation.

Josef Söntgen
